Category Archives: practice

Deploy an always-running Jupyter (IPython) Notebook server with remote access on Linux, auto-started at boot with Supervisor

I use Ubuntu, and this works there. Commands shown after `$` are run in the shell.

First, you need IPython/Jupyter. You can install it with pip: `$ pip install jupyter notebook`. If you don't have pip, install it with `$ apt-get install python-pip`.

I use Anaconda instead; I think it is the basic toolkit for anyone doing data science in Python, even amateurs. Get it from their website: https://www.continuum.io/downloads

In the Linux shell, choose a directory where you'd like to save downloads, then fetch the installer with wget, e.g.

`$ wget https://repo.continuum.io/archive/Anaconda2-4.4.0-Linux-x86_64.sh`

Then make the downloaded file executable:

`$ chmod +x Anaconda2-4.4.0-Linux-x86_64.sh`

and run the installer (don't forget the `.` before `/`):

`$ ./Anaconda2-4.4.0-Linux-x86_64.sh`

Next, set up the notebook.

- Generate a config file for the notebook. In the shell: `$ jupyter notebook --generate-config`

It reports where it wrote the file, e.g.

/home/iri/.jupyter/jupyter_notebook_config.py

Remember the path your machine returns; you will need it later.

- To get a hashed password, open IPython: `$ ipython`

```
In [1]: from notebook.auth import passwd
In [2]: passwd()
Enter password:
Verify password:
Out[2]: 'sha1:a1a7e6611365:4db3c012ed2dc11a6348c7a79d9e881e6992fc07'
```

Save the 'sha1:…' string somewhere (e.g. in a text file) to use later, then leave IPython with `exit()`.
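The 'sha1:…' string is a salted hash, not an encrypted password: only the hash is stored, and whatever you type at login is hashed again and compared. The sketch below illustrates the idea in Python 3, assuming the classic notebook scheme in which the digest is sha1(passphrase + salt) with a 12-hex-character salt. `make_passwd` and `check_passwd` are hypothetical names, not Jupyter's API, and recent Jupyter releases may default to another algorithm (argon2), so keep using `passwd()` for the real config.

```python
import hashlib
import hmac
import secrets

def make_passwd(passphrase, salt_len=12):
    """Return an 'algorithm:salt:hexdigest' string (sketch of the classic sha1 scheme)."""
    salt = secrets.token_hex(salt_len // 2)  # salt_len hex characters
    digest = hashlib.sha1((passphrase + salt).encode('utf-8')).hexdigest()
    return ':'.join(('sha1', salt, digest))

def check_passwd(hashed, passphrase):
    """Re-hash passphrase with the stored salt and compare it to the stored digest."""
    algorithm, salt, digest = hashed.split(':', 2)
    candidate = hashlib.new(algorithm, (passphrase + salt).encode('utf-8')).hexdigest()
    return hmac.compare_digest(candidate, digest)  # constant-time comparison

stored = make_passwd('my secret')
print(stored)  # sha1:<12 hex chars>:<40 hex chars>
print(check_passwd(stored, 'my secret'), check_passwd(stored, 'wrong'))  # True False
```

Because the salt is random, running `make_passwd` twice with the same passphrase gives different strings, yet both verify.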

- Edit the config file generated in the earlier step (with vi, vim, nano, …), e.g. `$ vim /home/iri/.jupyter/jupyter_notebook_config.py`

```python
c = get_config()
c.IPKernelApp.pylab = 'inline'
c.NotebookApp.ip = '*'
# paste the 'sha1:…' string you generated with passwd()
c.NotebookApp.password = 'sha1:ce23d945972f:34769685a7ccd3d08c84a18c63968a41f1140274'
c.NotebookApp.open_browser = False
c.NotebookApp.port = 1314  # any port you like
c.NotebookApp.notebook_dir = '/home/notebook'  # the directory where Jupyter Notebook starts
```

Save and exit.

- Find your machine's IP address with `$ ifconfig`.

- To verify, run `$ jupyter notebook`.

Keep `jupyter notebook` running, open a browser on another machine, and go to http://(the IP of your server machine):1314. For example, on an internal network (machines on the same Wi-Fi): http://192.168.1.112:1314. Enter the password (the one you typed into passwd() in IPython to get the 'sha1:…' string), and you will land in your Jupyter Notebook.

If you deploy on a server, e.g. on AWS, you can reach your Jupyter Notebook from anywhere on the planet by entering the server's public IP (or domain) and the port you set for the notebook; mine, for example, is http://shichaoji.com:520

I serve mine over https:// because I use an SSL certificate. It is optional, but a certificate is safer, especially on a publicly exposed server.

Note: if you get an error like Permission denied: '/run/user/1000/jupyter'

the fix is: `$ unset XDG_RUNTIME_DIR`

Optional: add a certificate (switch from http to https)

- In the shell, run `$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout mycert.pem -out mycert.pem`

You can press Enter through every prompt; this generates mycert.pem, which holds both the self-signed certificate and its private key. (A 2048-bit key is used here because some current OpenSSL configurations reject 1024-bit RSA keys.)

- Copy the certificate file to a directory: `$ cp mycert.pem /(some_directory)`, e.g. `$ cp mycert.pem /home/iri/.jupyter`

- Add another line to the config file, e.g. `$ nano /home/iri/.jupyter/jupyter_notebook_config.py`

```python
c.NotebookApp.certfile = u'/home/iri/.jupyter/mycert.pem'
```

- Run the notebook: `$ jupyter notebook`

- Open https://192.168.1.112:1314 from another computer and proceed past the browser's security warning (expected, since the certificate is self-signed).

Deployment: auto-start when the machine boots. It's best to do the following as root if the notebook will run permanently on a server such as AWS.

- Install Supervisor as root or a sudoer: `$ apt-get install supervisor`

- Go to Supervisor's config directory: `$ cd /etc/supervisor/conf.d/`

- Create and edit a config file: `$ vim deploy.conf` (any name, as long as it ends in .conf)

```ini
[program:notebook]
command = /home/iri/anaconda2/bin/jupyter notebook
; the user who installed Jupyter Notebook
user = iri
; Supervisor does not change HOME when switching user, so set it for Jupyter's config lookup
environment = HOME="/home/iri",USER="iri"
directory = /home/notebook
autostart = true
autorestart = true
; log (program sections use stdout_logfile; stderr is merged into it)
stdout_logfile = /home/notebook/book.log
redirect_stderr = true
```

- command: the full path of the jupyter executable in your Python installation

- user: the user who installed Python in the earlier step

- directory: the same directory as in the Jupyter Notebook config file, where it starts

- autostart: start Jupyter Notebook when the machine boots

- autorestart: restart Jupyter Notebook if the program fails

- Final step: in the shell, as root, run `$ service supervisor restart`

- To check the status as root, run `$ supervisorctl`, then type `status` at the supervisor> prompt (`help` lists the available commands).

10 Days of Statistics: my solutions

Environment: Python2

Day 0: Mean, Median, and Mode

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
wtf=raw_input()
wtf2=raw_input()
print wtf,type(wtf)
print wtf2, type(wtf2)
```

Your Output (stdout):

```
10 <type 'str'>
64630 11735 14216 99233 14470 4978 73429 38120 51135 67060 <type 'str'>
```

my solution

```python
li = wtf2.split()  # wtf2 is the second input line, read above
ll = [float(i) for i in li]

def mean(x):
    return sum(x) / len(x)

def median(x):
    x.sort()
    # trim one value from each end until one or two remain
    while len(x) > 2:
        x = x[1:-1]
    return sum(x) / len(x)

def mode(x):
    x = [int(i) for i in x]
    dic = {}
    for i in x:
        dic[i] = 0
    for i in x:
        dic[i] += 1
    m = max(dic.items(), key=lambda item: item[1])[1]  # highest count
    c = []
    for i in dic.items():
        if i[1] == m:
            c.append(i[0])
    return min(c)  # if several values tie, report the smallest

print mean(ll)
print median(ll)
print mode(ll)
```
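On Python 3.8+, the standard-library statistics module covers all three measures. A minimal cross-check on the sample input, where `min(multimode(...))` reproduces the "smallest value wins ties" rule used in `mode()` above:

```python
from statistics import mean, median, multimode

data = [64630, 11735, 14216, 99233, 14470, 4978, 73429, 38120, 51135, 67060]
print(mean(data))            # 43900.6
print(median(data))          # 44627.5: average of the two middle values
print(min(multimode(data)))  # 4978: every value appears once, so the smallest wins
```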

Environment: R

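```r
# Enter your code here. Read input from STDIN. Print output to STDOUT
x <- suppressWarnings(readLines(file("stdin")))
x <- strsplit(x, ' ')
x <- lapply(x, as.numeric)[[2]]  # the second line holds the data
#print(x)

getmode <- function(v) {
  counts <- table(v)
  # among the most frequent values, return the smallest
  min(as.numeric(names(counts)[counts == max(counts)]))
}

# cat() prints the bare numbers, without the "[1]" prefix that print() adds
cat(mean(x), median(x), getmode(x), sep = "\n")
```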

Day 0: Weighted Mean

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
# Input (stdin)
# 5
# 10 40 30 50 20
# 1 2 3 4 5
c = raw_input()  # number of elements (not needed below)
a = raw_input()  # values
b = raw_input()  # weights

a = [float(i) for i in a.split()]
b = [float(i) for i in b.split()]
up = [i * j for i, j in zip(a, b)]
print round(sum(up) / sum(b), 1)
```
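The code above is the weighted-mean formula, with values $x_i$ and weights $w_i$; worked through on the sample input:

```latex
\mu_w = \frac{\sum_{i=1}^{n} x_i w_i}{\sum_{i=1}^{n} w_i}
      = \frac{10\cdot 1 + 40\cdot 2 + 30\cdot 3 + 50\cdot 4 + 20\cdot 5}{1 + 2 + 3 + 4 + 5}
      = \frac{480}{15} = 32.0
```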

Day 1: Quartiles

Sample Input

9

3 7 8 5 12 14 21 13 18

Sample Output

6

12

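16

Explanation

Sorted, the data is 3, 5, 7, 8, 12, 13, 14, 18, 21, so the median is Q2 = 12. With the median excluded:

Lower half (L): 3, 5, 7, 8, so Q1 = (5 + 7) / 2 = 6

Upper half (U): 13, 14, 18, 21, so Q3 = (14 + 18) / 2 = 16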

```python
def median(l):
    """Median of a sorted list (Python 2 integer division, matching the integer sample output)."""
    n = len(l)
    return (l[(n - 1) // 2] + l[n // 2]) / 2

# Enter your code here. Read input from STDIN. Print output to STDOUT
y = int(raw_input())
x = sorted(int(i) for i in raw_input().split())

half = y // 2
lower = x[:half]                                  # values below the median
upper = x[half + 1:] if y % 2 == 1 else x[half:]  # values above the median

print median(lower)
print median(x)
print median(upper)
```

Or

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
y = int(raw_input())
x = sorted(int(i) for i in raw_input().split())

if y % 2 == 1:
    m = (y + 1) // 2   # 1-based position of the median
    half = m - 1       # size of each half (median excluded)
    l = half // 2
    r = m + l
    if half % 2 == 0:
        print (x[l-1] + x[l]) / 2
        print x[m-1]
        print (x[r-1] + x[r]) / 2
    else:
        print x[l]
        print x[m-1]
        print x[r]
else:
    m = y // 2         # size of each half
    l = (m + 1) // 2
    r = m + l
    if m % 2 == 0:
        print (x[l-1] + x[l]) / 2
        print (x[m-1] + x[m]) / 2
        print (x[r-1] + x[r]) / 2
    else:
        print x[l-1]
        print (x[m-1] + x[m]) / 2
        print x[r-1]
```
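For comparison, a minimal Python 3 sketch of the same halving rule using `statistics.median`; `quartiles` is a hypothetical helper name. It returns floats where the halves have even length, so wrap the results in `int()` if you need the integer output of the task.

```python
from statistics import median

def quartiles(values):
    """Return (Q1, Q2, Q3): split the sorted data into halves,
    leaving the median out of both halves when n is odd."""
    x = sorted(values)
    n = len(x)
    half = n // 2
    lower = x[:half]
    upper = x[half + 1:] if n % 2 else x[half:]
    return median(lower), median(x), median(upper)

print(quartiles([3, 7, 8, 5, 12, 14, 21, 13, 18]))  # (6.0, 12, 16.0)
```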

Day 1: Standard Deviation

Sample Input

5

10 40 30 50 20

Sample Output

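14.1

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
y = int(raw_input())
x = [int(i) for i in raw_input().split()]
m = sum(x) / float(len(x))               # mean
square = map(lambda v: (v - m) ** 2, x)  # squared deviations
sigma = (sum(square) / len(x)) ** 0.5    # population standard deviation
print round(sigma, 1)                    # the sample output has one decimal place
```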
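The task divides by N (population standard deviation). In Python 3 the statistics module offers both variants, which makes the difference easy to see on the sample input:

```python
from statistics import pstdev, stdev

data = [10, 40, 30, 50, 20]
print(round(pstdev(data), 1))  # 14.1: population, divides by N
print(round(stdev(data), 1))   # 15.8: sample, divides by N - 1
```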

Python iterators, loading data in chunks with Pandas


Iterators, load file in chunks

Iterators vs Iterables

An iterable is an object that can return an iterator:

- Examples: lists, strings, dictionaries, file connections

- An object with an associated iter() method

- Applying iter() to an iterable creates an iterator

An iterator is an object that keeps state and produces the next value when you call next() on it:

- Produces the next value with next()

```python
a = [1, 2, 3, 4]
b = iter([1, 2, 3, 4])
c = iter([5, 6, 7, 8])
print a
print b
print next(b), next(b), next(b), next(b)
print list(c)
```
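To make the iterable/iterator distinction concrete, here is a minimal hand-written iterator in Python 3 (in Python 2 the method is called `next` instead of `__next__`). `Countdown` is a hypothetical example class:

```python
class Countdown:
    """Iterator that counts down from start to 1, keeping its position as state."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self  # an iterator is also an iterable: iter() returns itself

    def __next__(self):
        if self.current <= 0:
            raise StopIteration  # tells for-loops and list() to stop
        value = self.current
        self.current -= 1
        return value

it = Countdown(3)
print(next(it))  # 3
print(list(it))  # [2, 1]: continues from the saved state
print(list(it))  # []: the iterator is now exhausted
```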

Iterating over iterables

- The following is Python 3 behavior; it does NOT work in Python 2, where range() builds a real list (xrange() is the lazy counterpart, though it cannot handle a number this large)

In Python 3, range() doesn't actually create the list; instead, it creates a range object whose iterator produces the values until it reaches the limit.

If range() created the actual list, calling it with 10^100 would not work, because a list that long could never fit in a computer's memory. 10^100 is called a googol: a 1 followed by a hundred 0s.

- Calling range() with 10^100 doesn't pre-create the list.

```python
# Create an iterator for range(10 ** 100): googol
googol = iter(range(10 ** 100))
```
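Since nothing is pre-computed, you can pull values from the googol iterator on demand. A short Python 3 sketch using `itertools.islice`:

```python
from itertools import islice

googol = iter(range(10 ** 100))        # no list is built, so this is instant
first_three = list(islice(googol, 3))  # take values lazily
print(first_three)                     # [0, 1, 2]
print(next(googol))                    # 3: the iterator remembers where it stopped
```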

Iterating over dictionaries

```python
a = {1: 9, 'what': 'why?'}
for key, value in a.items():
    print key, value
```

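Iterating over file connections

```python
f = open('university_towns.txt')
type(f)
iter(f)
iter(f) == f   # True: a file object is its own iterator
next(f)        # returns the first line
next(iter(f))  # iter(f) is f itself, so this returns the second line
```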
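Because a file object yields one line at a time, you can process a file without reading it all into memory. A minimal Python 3 sketch; `count_lines` and `head` are hypothetical helpers, and a temporary file with made-up lines stands in for university_towns.txt:

```python
import os
import tempfile
from itertools import islice

def count_lines(path):
    """Count a file's lines without loading the whole file into memory."""
    with open(path) as f:         # the with-block closes the file afterwards
        return sum(1 for _ in f)  # the file object yields one line at a time

def head(path, k):
    """Return the first k lines of a file, without trailing newlines."""
    with open(path) as f:
        return [line.rstrip('\n') for line in islice(f, k)]

# Demo on a temporary file with made-up content
fd, path = tempfile.mkstemp(suffix='.txt')
with os.fdopen(fd, 'w') as tmp:
    tmp.write('first\nsecond\nthird\n')
n_lines = count_lines(path)
first_two = head(path, 2)
os.remove(path)
print(n_lines, first_two)  # 3 ['first', 'second']
```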

```python
# Create a list of strings: mutants
mutants = ['charles xavier', 'bobby drake', 'kurt wagner', 'max eisenhardt', 'kitty pride']

# Create a list of tuples: mutant_list
mutant_list = list(enumerate(mutants))

# Print the list of tuples
print(mutant_list)
print

# Unpack and print the tuple pairs
for index1, value1 in enumerate(mutants):
    print(index1, value1)

print "\nChange the start index\n"
for index2, value2 in enumerate(mutants, start=3):
    print(index2, value2)
```

Using zip

zip() takes any number of iterables and, in Python 3, returns a zip object, which is an iterator of tuples.

- To print the values of a zip object, convert it into a list first and then print it.

- Printing a zip object directly will not show the values unless you unpack it first.

In Python 2, zip() returns a list:

Docstring: zip(seq1 [, seq2 [...]]) -> [(seq1[0], seq2[0] ...), (...)]

Return a list of tuples, where each tuple contains the i-th element from each of the argument sequences. The returned list is truncated in length to the length of the shortest argument sequence.

```python
aliases = ['prof x', 'iceman', 'nightcrawler', 'magneto', 'shadowcat']
powers = ['telepathy', 'thermokinesis', 'teleportation', 'magnetokinesis', 'intangibility']

# Create a list of tuples: mutant_data
mutant_data = list(zip(mutants, aliases, powers))

# Print the list of tuples
print(mutant_data)
print

# Create a zip object using the three lists: mutant_zip
mutant_zip = zip(mutants, aliases, powers)

# Print the type: <type 'list'> in Python 2, <class 'zip'> in Python 3
print(type(mutant_zip))

# Unpack the zip object and print the tuple values
for value1, value2, value3 in mutant_zip:
    print(value1, value2, value3)
```
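zip() also works in reverse: unpacking a list of tuples with `*` passes each tuple as a separate argument, so `zip(*pairs)` regroups the pairs into columns. A short Python 3 sketch on part of the lists above:

```python
mutants = ['charles xavier', 'bobby drake', 'kurt wagner']
aliases = ['prof x', 'iceman', 'nightcrawler']

pairs = list(zip(mutants, aliases))  # [('charles xavier', 'prof x'), ...]
names, nicknames = zip(*pairs)       # zip(*pairs) turns the pairs back into columns
print(names)      # ('charles xavier', 'bobby drake', 'kurt wagner')
print(nicknames)  # ('prof x', 'iceman', 'nightcrawler')
```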

Loading data in chunks

- There can be too much data to hold in memory

- Solution: load data in chunks!

- Pandas function: read_csv()

- Specify the chunk: chunksize

```python
import pandas as pd
from time import time

start = time()
df = pd.read_csv('kamcord_data.csv')
print 'used {:.2f} s'.format(time() - start)
print df.shape
df.head(1)
```

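explore

```python
a = pd.read_csv('kamcord_data.csv', chunksize=4)    # reader yielding 4-row DataFrames
b = pd.read_csv('kamcord_data.csv', iterator=True)  # reader used with get_chunk()
next(a)
x = next(a)
y = next(a)
y.append(x, ignore_index=True)  # DataFrame.append was removed in pandas 2.0
pd.concat([x, y], ignore_index=True)
```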

1st way of loading data in chunks

```python
# Grow one DataFrame with append (pandas < 2.0 only); every append copies the data built so far
start = time()
c = 0
for chunk in pd.read_csv('kamcord_data.csv', chunksize=50000):
    if c == 0:
        df = chunk
    else:
        df = df.append(chunk, ignore_index=True)
    c += 1
print c
print 'used {:.2f} s'.format(time() - start)
print df.shape
df.head(1)
```

2nd way of loading data in chunks

```python
# Collect the chunks in a list and concatenate once at the end
start = time()
want = []
for chunk in pd.read_csv('kamcord_data.csv', chunksize=50000):
    want.append(chunk)
print len(want)
df = pd.concat(want, ignore_index=True)
print 'used {:.2f} s'.format(time() - start)
print df.shape
df.head(1)
```

3rd way of loading data in chunks

```python
start = time()
want = []
f = pd.read_csv('kamcord_data.csv', iterator=True)
go = True
while go:
    try:
        want.append(f.get_chunk(50000))
    except StopIteration:  # raised once the file is exhausted
        go = False
print len(want)
df = pd.concat(want, ignore_index=True)
print 'used {:.2f} s'.format(time() - start)
print df.shape
df.head(1)
```
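All three approaches above still end with the whole table in memory. When only an aggregate is needed, each chunk can be processed and discarded. The sketch below shows that mechanism in plain Python 3 (no pandas), assuming a hypothetical `read_in_chunks` generator built on `itertools.islice` and a made-up CSV with a `plays` column:

```python
import csv
import io
from itertools import islice

def read_in_chunks(rows, size):
    """Yield lists of at most `size` rows from any iterable, one list at a time."""
    rows = iter(rows)
    while True:
        chunk = list(islice(rows, size))
        if not chunk:  # the iterable is exhausted
            return
        yield chunk

# Made-up in-memory CSV standing in for a large file on disk
text = io.StringIO("user,plays\na,3\nb,5\nc,2\nd,7\ne,1\n")
n_chunks, total = 0, 0
for chunk in read_in_chunks(csv.DictReader(text), 2):
    total += sum(int(row['plays']) for row in chunk)  # keep only a running total
    n_chunks += 1
print(n_chunks, total)  # 3 18
```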

Processing large amounts of Twitter data by chunks

```python
# Import packages
import json
import pandas as pd

# Initialize empty list to store tweets: tweets_data
tweets_data = []

# Read in tweets (one JSON object per line) and store in list: tweets_data
with open('tweets.txt', 'r') as h:
    for i in h:
        try:
            tmp = json.loads(i)
            tweets_data.append(tmp)
            print 'O',  # parsed
        except ValueError:
            print 'X',  # not valid JSON, skipped

t_df = pd.DataFrame(tweets_data)
print
print t_df.shape
t_df.to_csv('tweets.csv', index=False, encoding='utf-8')
t_df.head(1)
```
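The parsing loop above can be factored into a reusable function that also reports how many lines were skipped. A minimal Python 3 sketch; `load_json_lines` is a hypothetical name and the input is a made-up stand-in for tweets.txt:

```python
import io
import json

def load_json_lines(lines):
    """Parse one JSON object per line; return (records, number of bad lines)."""
    records, bad = [], 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        try:
            records.append(json.loads(line))
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            bad += 1
    return records, bad

sample = io.StringIO('{"lang": "en"}\nnot json\n\n{"lang": "et"}\n')
records, bad = load_json_lines(sample)
print(len(records), bad)  # 2 1
```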

Processing large amounts of data by chunks

```python
# Initialize an empty dictionary: counts_dict
counts_dict = {}

# Iterate over the file chunk by chunk
for chunk in pd.read_csv('tweets.csv', chunksize=10):
    # Iterate over the column in dataframe
    for entry in chunk['lang']:
        if entry in counts_dict:
            counts_dict[entry] += 1
        else:
            counts_dict[entry] = 1

# Print the populated dictionary
print(counts_dict)
```

Extracting information for large amounts of Twitter data

- make it reusable

- wrap it in a function (def)

```python
# Define count_entries()
def count_entries(csv_file, c_size, colname):
    """Return a dictionary with counts of
    occurrences as value for each key."""
    # Initialize an empty dictionary: counts_dict
    counts_dict = {}
    # Iterate over the file chunk by chunk
    for chunk in pd.read_csv(csv_file, chunksize=c_size):
        # Iterate over the column in dataframe
        for entry in chunk[colname]:
            if entry in counts_dict:
                counts_dict[entry] += 1
            else:
                counts_dict[entry] = 1
    # Return counts_dict
    return counts_dict

# Call count_entries(): result_counts
result_counts = count_entries('tweets.csv', 10, 'lang')

# Print result_counts
print(result_counts)
```
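The if/else counting pattern is exactly what `collections.Counter` does. A standard-library-only sketch of the same chunked count in Python 3; `count_entries_stdlib` is a hypothetical name, rows come from `csv.DictReader`, and the sample CSV is made up:

```python
import csv
import io
from collections import Counter
from itertools import islice

def count_entries_stdlib(rows, chunk_size, colname):
    """Count the values of colname over an iterable of dict rows, one chunk at a time."""
    counts = Counter()
    rows = iter(rows)
    while True:
        chunk = list(islice(rows, chunk_size))  # at most chunk_size rows in memory
        if not chunk:
            break
        counts.update(row[colname] for row in chunk)
    return dict(counts)

# Made-up sample standing in for tweets.csv
sample = io.StringIO("id,lang\n1,en\n2,et\n3,en\n4,und\n5,en\n")
result_counts = count_entries_stdlib(csv.DictReader(sample), 2, 'lang')
print(result_counts)  # {'en': 3, 'et': 1, 'und': 1}
```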

Install Docker on Linux with one line; check port usage

$ curl -sSL https://get.docker.com/ | sh

https://docs.docker.com/install/linux/docker-ce/centos/#install-docker-ce

resources

https://github.com/shichaoji/docker-cheat-sheet

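check port usage

`sudo netstat -nlp | grep ':80 '` (the pattern ':80 ' matches port 80 exactly; a plain `grep 80` also matches 8080, 1800, and so on)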
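From a script, a quick way to tell whether anything is listening on a port is to try connecting to it. A minimal Python 3 sketch (`port_in_use` is a hypothetical helper); unlike `netstat -p`, it cannot tell you which process owns the port:

```python
import socket

def port_in_use(port, host='127.0.0.1', timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        return s.connect_ex((host, port)) == 0  # 0 means the connection succeeded

# Demo: open a listener on a free port chosen by the OS, then close it again
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(('127.0.0.1', 0))
server.listen(1)
port = server.getsockname()[1]
busy = port_in_use(port)
server.close()
free = port_in_use(port)
print(busy, free)  # True False
```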

ECDFs: empirical cumulative distribution functions

In this exercise, you will write a function that takes a 1D array of data as input and returns the x and y values of the ECDF. ECDFs are among the most important plots in statistical analysis. You can write your own function, foo(a,b), according to the following skeleton:

```python
def foo(a, b):
    """State what function does here"""
    # Computation performed here
    return x, y
```

The function foo() above takes two arguments a and b and returns two values x and y. The function header def foo(a,b): contains the function signature foo(a,b), which consists of the function name along with its parameters.

Define a function with the signature ecdf(data). Within the function definition,

- Compute the number of data points, n, using the len() function.

- The x-values are the sorted data. Use the np.sort() function to perform the sorting.

- The y data of the ECDF go from 1/n to 1 in equally spaced increments. You can construct this using np.arange() and then dividing by n.

- The function returns the values x and y.

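```python
from __future__ import division  # makes / true division in Python 2 (no-op in Python 3)

import numpy as np

def ecdf(data):
    """Compute ECDF for a one-dimensional array of measurements."""
    # Number of data points: n
    n = len(data)
    # x-data for the ECDF: x
    x = np.sort(data)
    # y-data for the ECDF: y
    y = np.arange(1, n + 1) / n
    return x, y
```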
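The same idea works without NumPy, and the ECDF can also be evaluated at a single point: its value at t is the fraction of observations less than or equal to t, which `bisect_right` finds on the sorted data. A Python 3 sketch with hypothetical names `ecdf_pure` and `ecdf_at`:

```python
from bisect import bisect_right

def ecdf_pure(data):
    """Sorted x-values and y = 1/n, 2/n, ..., 1 (Python 3 true division)."""
    x = sorted(data)
    n = len(x)
    y = [(i + 1) / n for i in range(n)]
    return x, y

def ecdf_at(data, t):
    """Value of the ECDF at t: the fraction of observations <= t."""
    x = sorted(data)
    return bisect_right(x, t) / len(x)

xs, ys = ecdf_pure([3, 1, 2, 2])
print(xs, ys)                    # [1, 2, 2, 3] [0.25, 0.5, 0.75, 1.0]
print(ecdf_at([3, 1, 2, 2], 2))  # 0.75
```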

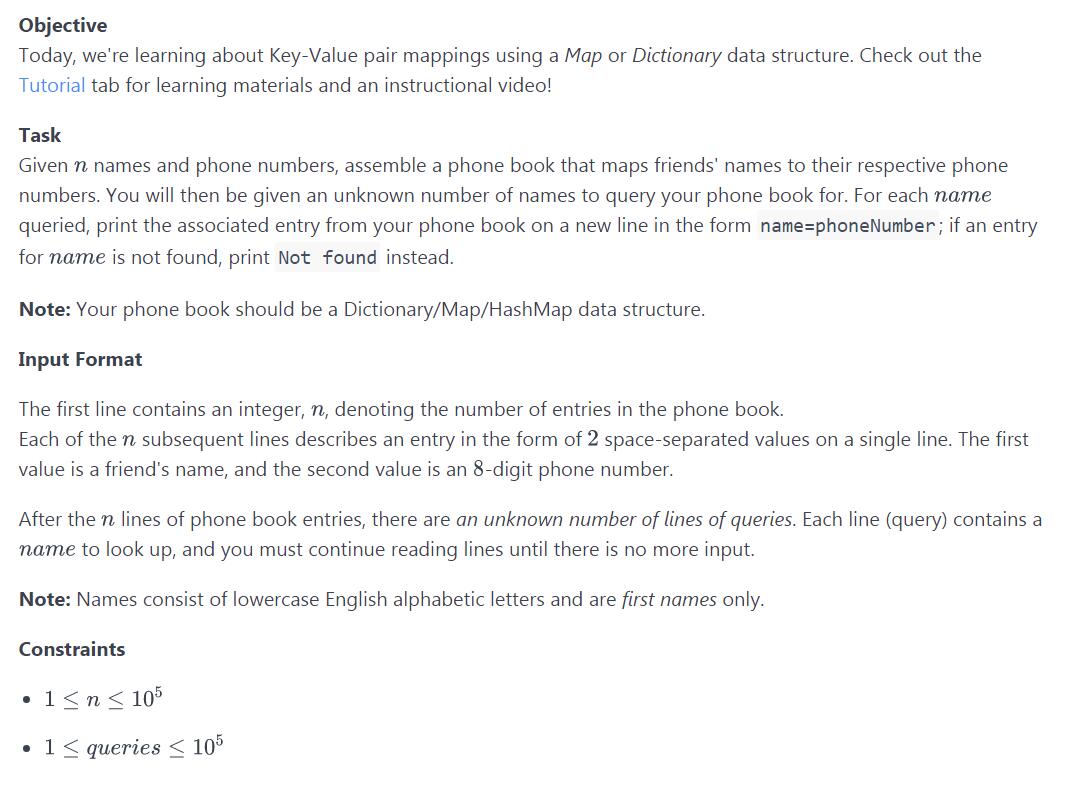

30 Days of Code (https://www.hackerrank.com)

Day 6

Task

Given a string, S, of length N that is indexed from 0 to N-1, print its even-indexed and odd-indexed characters as 2 space-separated strings on a single line (see the Sample below for more detail).

Note: 0 is considered to be an even index.

Sample Input

2

Hacker

Rank

Sample Output

Hce akr

Rn ak

My code

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
x = int(raw_input())
for i in range(x):
    d = raw_input()
    a = ''
    b = ''
    for j in range(0, len(d), 2):  # even indices
        a += d[j]
    for j in range(1, len(d), 2):  # odd indices
        b += d[j]
    print a, b
```

official answer:

```python
t = int(raw_input())
for _ in range(t):
    line = raw_input()
    first = ""
    second = ""
    for i, c in enumerate(line):
        if (i & 1) == 0:
            first += c
        else:
            second += c
    print first, second
```
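Slicing with a step does the same job in one line: `s[::2]` takes indices 0, 2, 4, … and `s[1::2]` takes 1, 3, 5, …. A Python 3 sketch with a hypothetical helper name:

```python
def split_even_odd(s):
    """Return the even-indexed and odd-indexed characters, space-separated."""
    return s[::2] + ' ' + s[1::2]

for word in ['Hacker', 'Rank']:
    print(split_even_odd(word))  # Hce akr, then Rn ak
```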

Day 8

Sample Input

3

sam 99912222

tom 11122222

harry 12299933

sam

edward

harry

Sample Output

sam=99912222

Not found

harry=12299933

my answer

```python
# Enter your code here. Read input from STDIN. Print output to STDOUT
x = raw_input()
y = {}
for i in range(int(x)):
    k = raw_input().split()
    y[k[0]] = k[-1]

# Read queries until the input runs out
while True:
    try:
        c = raw_input().strip()
    except EOFError:
        break
    if not c:
        continue
    if c in y:
        print c + '=' + y[c]
    else:
        print 'Not found'
```

official

Python3

```python
import sys

# Read input and assemble Phone Book
n = int(input())
phoneBook = {}
for i in range(n):
    contact = input().split(' ')
    phoneBook[contact[0]] = contact[1]

# Process Queries
lines = sys.stdin.readlines()
for i in lines:
    name = i.strip()
    if name in phoneBook:
        print(name + '=' + str(phoneBook[name]))
    else:
        print('Not found')
```

Day 12: Inheritance

```python
class Person:
    def __init__(self, firstName, lastName, idNumber):
        self.firstName = firstName
        self.lastName = lastName
        self.idNumber = idNumber

    def printPerson(self):
        print "Name:", self.lastName + ",", self.firstName
        print "ID:", self.idNumber

class Student(Person):
    def __init__(self, firstName, lastName, idNum, scores):
        Person.__init__(self, firstName, lastName, idNum)
        self.scores = scores

    def calculate(self):
        avg = sum(self.scores) / len(self.scores)
        if 90 <= avg <= 100:
            grade = 'O'
        elif 80 <= avg < 90:
            grade = 'E'
        elif 70 <= avg < 80:
            grade = 'A'
        elif 55 <= avg < 70:
            grade = 'P'
        elif 40 <= avg < 55:
            grade = 'D'
        else:
            grade = 'T'
        return grade

line = raw_input().split()
firstName = line[0]
lastName = line[1]
idNum = line[2]
numScores = int(raw_input())  # not needed for Python
scores = map(int, raw_input().split())
s = Student(firstName, lastName, idNum, scores)
s.printPerson()
print "Grade:", s.calculate()
```
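In Python 3 the idiomatic way to call the parent constructor is `super().__init__(...)`, and the grade bands can live in a table checked from the top down. A minimal sketch using the same bands as the code above; the `BANDS` attribute is a hypothetical design choice, not part of the task's template:

```python
class Person:
    def __init__(self, firstName, lastName, idNumber):
        self.firstName = firstName
        self.lastName = lastName
        self.idNumber = idNumber

class Student(Person):
    # (lowest average for the grade, grade), checked from the top down
    BANDS = [(90, 'O'), (80, 'E'), (70, 'A'), (55, 'P'), (40, 'D')]

    def __init__(self, firstName, lastName, idNum, scores):
        super().__init__(firstName, lastName, idNum)  # Python 3 form of Person.__init__(self, ...)
        self.scores = scores

    def calculate(self):
        avg = sum(self.scores) / len(self.scores)
        for lowest, grade in self.BANDS:
            if avg >= lowest:
                return grade
        return 'T'  # below 40

s = Student('Heraldo', 'Memelli', '8135627', [100, 80])
print(s.lastName, s.calculate())  # Memelli O
```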

Day 14: Scope

Find the maximum absolute difference between any two elements of an array.

```python
class Difference:
    def __init__(self, a):
        self.__elements = a

    # Add your code here
    def computeDifference(self):
        # the max of a - b over all ordered pairs equals the largest |a - b|
        self.maximumDifference = max([a - b for a in self.__elements for b in self.__elements])

# End of Difference class

_ = raw_input()
a = [int(e) for e in raw_input().split(' ')]
d = Difference(a)
d.computeDifference()
print d.maximumDifference
```
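The pairwise comparison above is O(n²). Since the largest |a - b| is always the largest element minus the smallest, one pass each for max and min is enough. A Python 3 sketch with a hypothetical helper name:

```python
def max_abs_difference(values):
    """Largest |a - b| over all pairs: simply max - min, in linear time."""
    return max(values) - min(values)

print(max_abs_difference([1, 2, 5]))  # 4
```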

Day 15: Linked List

```python
class Node:
    def __init__(self, data):
        self.data = data
        self.next = None

class Solution:
    def display(self, head):
        current = head
        while current:
            print current.data,
            current = current.next

    def insert(self, head, data):
        # walk to the last node and attach the new one there
        if head is None:
            head = Node(data)
        else:
            current = head
            while current.next is not None:
                current = current.next
            current.next = Node(data)
        return head

mylist = Solution()
T = int(input())
head = None
for i in range(T):
    data = int(input())
    head = mylist.insert(head, data)
mylist.display(head)
```
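`insert()` walks the whole list on every call, so building a list of n items costs O(n²). Keeping a pointer to the last node makes each append O(1). A Python 3 sketch; `LinkedList` is a hypothetical wrapper, not part of the HackerRank template:

```python
class Node:
    def __init__(self, data):
        self.data = data
        self.next = None

class LinkedList:
    """Singly linked list with head and tail pointers, so append is O(1)."""

    def __init__(self):
        self.head = None
        self.tail = None

    def append(self, data):
        node = Node(data)
        if self.head is None:
            self.head = self.tail = node
        else:
            self.tail.next = node  # link after the current last node
            self.tail = node

    def __iter__(self):
        current = self.head
        while current:
            yield current.data
            current = current.next

lst = LinkedList()
for value in [2, 3, 4, 1]:
    lst.append(value)
print(' '.join(str(v) for v in lst))  # 2 3 4 1
```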