Visualization

1. A simple interactive data visualization web app created and deployed on AWS

embedded in this page

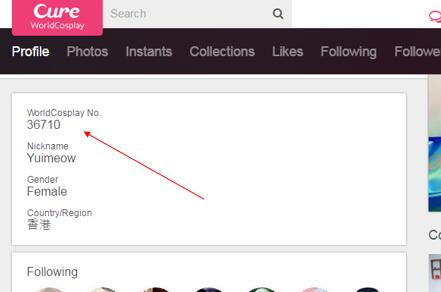

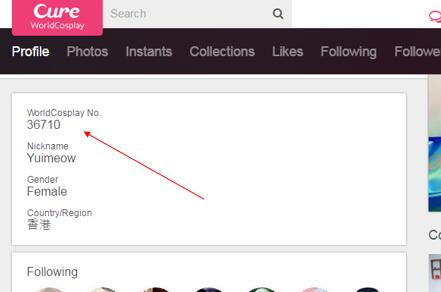

2. My Tableau visualization on Tableau Public Gallery

3. An image crawler I wrote, with a GUI and a standalone executable (no programming environment needed); scroll down

original address

3. An image crawler I wrote

In 2014 I wrote a Python image crawler for one specific website, Cure WorldCosplay, where cosplayers from all over the world post their own pictures. The site has about 10k active members and up to 10 million posted pictures. The crawler comes with a GUI (graphical user interface) and is packaged into a single .exe file, so you do not need to install Python or any other program to run it. The downside is that some antivirus software may flag the file as unsafe.

Here is the program! Click a name below to download:

- With interface

- Without interface

In theory, with enough disk space you could download every picture on the site (about 9,800 GB); the only limit is your bandwidth. I once ran 36 crawlers at the same time on a Linux server, downloading pictures 24/7 at the maximum available bandwidth.

How to use it:

The program directs you to the Cure WorldCosplay ranking page, where you can browse around and pick a coser you like. The coser's ID (the WorldCosplay No.) is displayed on his or her page. Copy that number and type it into the program, and it will download all of that coser's HD photos in no time (on average, each coser has about 100 pictures).
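The step between "type in an ID" and "download the photos" is finding the image links on a page. Here is a minimal sketch of that step using only the standard library. It assumes the pictures appear as plain `<img>` tags in the page HTML, which may not match how the site actually serves them; `ImageLinkParser` and `extract_image_urls` are hypothetical names and not taken from the original source code.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageLinkParser(HTMLParser):
    """Collect the absolute URL of every <img> tag on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths against the URL of the page itself.
            self.image_urls.append(urljoin(self.base_url, src))


def extract_image_urls(page_html, base_url):
    """Return the image URLs found in page_html, without duplicates."""
    parser = ImageLinkParser(base_url)
    parser.feed(page_html)
    # dict.fromkeys drops repeats while keeping first-seen order.
    return list(dict.fromkeys(parser.image_urls))
```

A real crawler would also have to follow pagination and map thumbnails to their HD versions; both depend on the site's layout and are left out here.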

Moreover, the software generates a local index HTML file that displays all the downloaded images as thumbnails, so you can browse them and click any one to see the HD picture.
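An index page like that can be produced with very little code. The sketch below is an illustration under my own assumptions, not the original implementation: `build_index` is a hypothetical name, and instead of creating real thumbnail files (which is probably where an imaging library such as Pillow would come in) it simply lets the browser scale each full-size image down with a `width` attribute.

```python
import html
from pathlib import Path
from urllib.parse import quote

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif"}


def build_index(image_dir, thumb_width=200):
    """Write index.html into image_dir and return the path to it."""
    image_dir = Path(image_dir)
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    cells = []
    for path in images:
        link = quote(path.name)                  # URL-safe relative link
        label = html.escape(path.name, quote=True)
        # Each scaled-down image links to the full-size file.
        cells.append(
            f'<a href="{link}"><img src="{link}" '
            f'width="{thumb_width}" alt="{label}"></a>'
        )
    page = (
        '<!DOCTYPE html>\n<html><head><meta charset="utf-8">'
        "<title>Downloaded images</title></head>\n<body>\n"
        + "\n".join(cells)
        + "\n</body></html>\n"
    )
    index_path = image_dir / "index.html"
    index_path.write_text(page, encoding="utf-8")
    return index_path
```

Calling `build_index("downloads/12345")` on a folder of downloaded pictures (the folder name is just an example) writes `index.html` next to them; opening that file in a browser gives the thumbnail view.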

Source code is here.

To keep the packaged file as small as possible, I used almost no external libraries apart from the imaging library Pillow. I tried Scrapy to fetch pictures more efficiently and PyQt to build a prettier interface, but the packaged .exe file became much larger and ran into potential dependency problems. So I stuck with Python's standard libraries: urllib, multiprocessing, and Tkinter.
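To give a feel for how far the standard library goes, here is a rough sketch of a parallel downloader built on `urllib` and the `multiprocessing` package. It is not the original code: `download_one` and `download_all` are hypothetical names, and I use `multiprocessing.pool.ThreadPool` (threads behind the same API as `Pool`) so the example runs anywhere without an `if __name__ == "__main__":` guard.

```python
import os
from multiprocessing.pool import ThreadPool
from urllib.parse import urlsplit
from urllib.request import urlopen


def download_one(job):
    """Fetch one URL into dest_dir; return (url, saved_path or None)."""
    url, dest_dir = job
    # Name the local file after the last path segment of the URL.
    filename = os.path.basename(urlsplit(url).path) or "unnamed"
    target = os.path.join(dest_dir, filename)
    try:
        with urlopen(url, timeout=30) as response, open(target, "wb") as out:
            out.write(response.read())
    except OSError:
        # URLError and socket timeouts are OSError subclasses;
        # report the failure and let the other downloads continue.
        return url, None
    return url, target


def download_all(urls, dest_dir, workers=8):
    """Download every URL with a pool of workers, keeping input order."""
    os.makedirs(dest_dir, exist_ok=True)
    jobs = [(url, dest_dir) for url in urls]
    with ThreadPool(workers) as pool:
        return pool.map(download_one, jobs)
```

Swapping `ThreadPool` for `multiprocessing.Pool` gives separate processes instead of threads. For downloads, which mostly wait on the network, threads are usually enough.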

It was 2014 and I had just picked up Python; before long I was hooked on how simple and powerful it is. I was working on a research project at the time, and I soon realized that Python can do anything:

- Python scrapy, selenium: scraping user info from social media and business websites

- Python pandas, matplotlib: data cleansing and exploratory analysis

- Python gensim, scikit-learn: text sentiment analysis, topic modeling

- Python scikit-learn, GraphLab Create, xgboost: machine learning

- Python Flask: deploying interactive websites